By now I'm sure everyone reading this has heard about AI and the potential it has to disrupt everything. I thought I would put it to the test and try it out for texture generation.

Texture generation is an area I have done a lot of work in over the years, having used Substance Designer/Painter, Krita, GIMP, Blender and Unreal material nodes, as well as many other programs I'm probably forgetting.

It is a task that takes a significant amount of time, especially with the four or five different maps PBR requires. Often artists will simply skip many of these steps; I have seen a lot of albedo maps plugged into the roughness slot in a material node setup.

AI promises to dramatically improve the speed of generation of textures and materials, so I decided to try it out and see how good a result I could get.

Stable diffusion

https://github.com/Stability-AI/StableDiffusion

Rather than wrangling Python libraries, we can clone the stable-diffusion-webui project to use the full suite of Stable Diffusion tools from a GUI that runs locally.

https://github.com/AUTOMATIC1111/stable-diffusion-webui

These repos contain documentation for how to get them running, but if you're familiar with git it's pretty easy.

Texture Generation

Out of the gate, Stable Diffusion does not do a great job of generating textures. Even with some prompt wrangling I couldn't get anything resembling usable textures.

[Image: cobblestones, game texture, game asset, photorealistic, photography, 8K uhd]

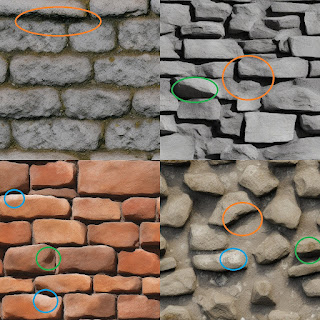

Switching to a fine-tuned model improves the results somewhat. In this case I used the Epic Diffusion model which I had previously used for generating images for TTRPGs.

Results on the fine-tune are definitely better than the raw model. However, anyone who understands PBR and albedo will very quickly see these images are not usable. Each contains a lot of lighting information.

- The highlights (blue circles) could be removed in software relatively easily, but detail will be lost in those areas.

- There are very obvious directional light cues (green circles). These are difficult to remove without normal information and will often be interpreted as height differences by texture generation tools. Whilst these are theoretically removable with inverse rendering techniques, we are working with arbitrary textures, so approximating normal maps for the purpose of light removal is unlikely to provide good results.

- The worst problem with these images is the hard shadows most of them exhibit. These are pretty much impossible to remove without knowing the underlying geometry and lighting direction.

Texture-diffusion

Here is a link to the model card

If you are using the AUTOMATIC1111 UI, you will need to download the ckpt version of the texture-diffusion model. It is available from here. If you have issues getting the texture-diffusion model to work with the UI, updating the repo and submodules should fix this.

The results are significantly better than the other options:

[Image: pbr, castle brick wall]

By default Stable Diffusion generates textures at 512x512. There are three options for upscaling that: generating at a higher resolution, using the hi-res fix option, or upscaling the result.
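For anyone who would rather script this than click through the GUI, the first two options can be sketched as request bodies for the web UI's HTTP API. This is only a rough illustration: it assumes the UI was started with the `--api` flag and that the payloads are POSTed to its `/sdapi/v1/txt2img` endpoint. The helper name `build_payload` is hypothetical, and I did all of my own generation through the GUI.

```python
def build_payload(prompt, size=512, hires_scale=None):
    """Build a txt2img request body (hypothetical helper, not part of the web UI).

    size        -- square size in pixels of the base generation
    hires_scale -- if given, turn on the hi-res fix and scale the base image by it
    """
    payload = {"prompt": prompt, "width": size, "height": size}
    if hires_scale is not None:
        payload["enable_hr"] = True       # the "hi-res fix" checkbox in the GUI
        payload["hr_scale"] = hires_scale
    return payload


# Option 1: ask for the higher resolution directly.
direct_2048 = build_payload("pbr, castle brick wall", size=2048)

# Option 2: generate at 512x512 and let the hi-res fix scale it 4x to 2048x2048.
hires_fix = build_payload("pbr, castle brick wall", size=512, hires_scale=4)
```

The third option, upscaling a finished image, goes through a different part of the UI and is not covered by these payloads.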

[Image: pbr, brick wall]

The first two options tended to create results like the one shown above. Whilst not bad by any means, the detail doesn't really improve; you just get more of it.

Upscaling the resultant 512x512 image to 2048x2048 seems to be the best option. As with other applications, the LDSR upscaler tends to provide the best results. It is a slow model but well worth the wait.
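Going from 512x512 to 2048x2048 is a 4x resize. If you wanted to script the step, a minimal sketch against the web UI's API might look like the following. It assumes the UI is running locally on the default port with the `--api` flag, that the `/sdapi/v1/extra-single-image` endpoint is available in your version, and that the upscaler is listed under the name "LDSR". The function names are my own, so treat this as a starting point rather than a tested recipe.

```python
import base64
import json
import urllib.request

# Assumption: local web UI started with --api on the default port.
EXTRAS_URL = "http://127.0.0.1:7860/sdapi/v1/extra-single-image"


def upscale_factor(src_size, dst_size):
    """Return the integer resize factor, e.g. 512 -> 2048 gives 4."""
    if dst_size % src_size != 0:
        raise ValueError("target size must be a whole multiple of the source size")
    return dst_size // src_size


def build_upscale_payload(png_bytes, src_size=512, dst_size=2048, upscaler="LDSR"):
    """Build the request body for a single-image upscale."""
    return {
        "image": base64.b64encode(png_bytes).decode("ascii"),
        "upscaling_resize": upscale_factor(src_size, dst_size),
        "upscaler_1": upscaler,
    }


def upscale(in_path, out_path):
    """Send one image to the local web UI and save the upscaled result."""
    with open(in_path, "rb") as f:
        payload = build_upscale_payload(f.read())
    request = urllib.request.Request(
        EXTRAS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        image_b64 = json.load(response)["image"]  # base64-encoded result
    with open(out_path, "wb") as f:
        f.write(base64.b64decode(image_b64))
```

LDSR is slow, so expect a call like `upscale("albedo_512.png", "albedo_2048.png")` (file names are placeholders) to block for a while.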

[Image: Original 512x512]

[Image: Upscaled 2048x2048 using LDSR]

Materials

Out of the box, texture-diffusion provides albedo maps. However, for PBR rendering we need more than that. Preferably we should have height, normal and roughness maps, and metalness too if we're generating mixed-material texture sets.

I haven't tested using texture-diffusion to generate these images; it may work. However, there is an easier solution that is guaranteed to work and is easy to use.

Materialize by Bounding Box Software

Materialize is a machine learning powered tool that is used to generate PBR material maps from photos. For our use case we can use it to generate the other maps from our albedo.
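To give a feel for what "generating the other maps from the albedo" involves, here is a deliberately crude sketch. It is not how Materialize works internally. It just treats brighter pixels as higher (a common but rough assumption), then derives a tangent-space normal map from the slopes of that height map. Everything here is illustrative and uses plain Python lists so it runs without any image libraries.

```python
import math


def height_from_albedo(albedo):
    """Approximate height as luminance. albedo is rows of (r, g, b) in 0..1."""
    return [[0.2126 * r + 0.7152 * g + 0.0722 * b for (r, g, b) in row]
            for row in albedo]


def normals_from_height(height, strength=1.0):
    """Unit normals from central differences; indices wrap at the edges."""
    rows, cols = len(height), len(height[0])
    normals = []
    for y in range(rows):
        out_row = []
        for x in range(cols):
            # Slope in x and y, sampled from the neighbouring pixels.
            dx = (height[y][(x + 1) % cols] - height[y][(x - 1) % cols]) * 0.5
            dy = (height[(y + 1) % rows][x] - height[(y - 1) % rows][x]) * 0.5
            nx, ny, nz = -dx * strength, -dy * strength, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            out_row.append((nx / length, ny / length, nz / length))
        normals.append(out_row)
    return normals


def encode_normal(normal):
    """Map a unit normal from the -1..1 range to 8-bit RGB."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in normal)
```

A perfectly flat input encodes to the familiar normal-map blue of (128, 128, 255). Note that the direction of the green channel differs between engines, so the sign of `ny` may need flipping for your renderer.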

The software is free to use and open source too, which is bonkers considering the power this tool provides.

[Image: The Materialize software interface]

The results this tool provides are amazing. Going from importing the albedo image into Materialize to the final texture set took maybe five minutes. It could take less than that if you don't fiddle with every slider to see what they do.

That's insane!

The longest part of creating the above material was waiting for the AI upscaler to finish during the Stable Diffusion step.

Conclusion

Results
